Network as Generator

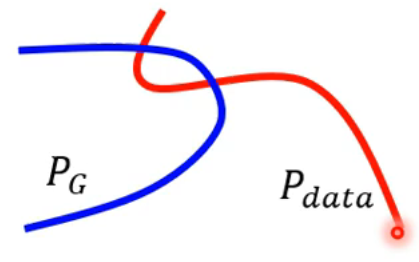

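Unlike a traditional neural network, which maps each input to a single output, a generator network also takes a random sample from a simple distribution (such as a Gaussian) as input, so its output is a distribution rather than a single value.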

Why Output a Distribution?

When the same input may correspond to several different valid outputs, the network should output a distribution; a network that is forced to give one answer tends to average the valid outputs into a prediction that matches none of them. Here is an example:

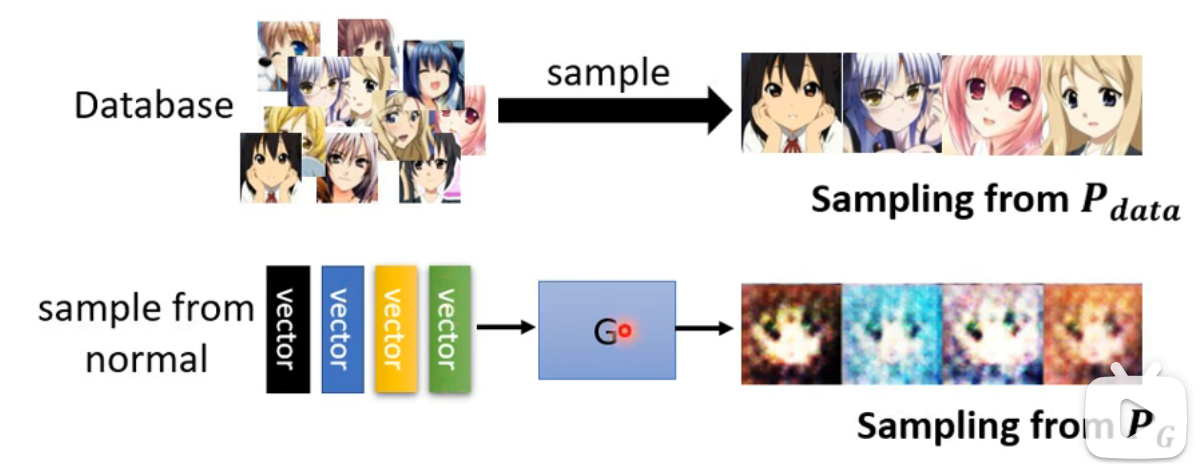
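A minimal sketch of the averaging problem, assuming a toy case where one input has two equally valid targets, -1 and +1 (hypothetical values standing in for two distinct outcomes). The function names are illustrative only.

```python
def mse(prediction, targets):
    """Mean squared error of one fixed prediction against all valid targets."""
    return sum((prediction - t) ** 2 for t in targets) / len(targets)


def best_single_prediction(targets):
    """The single value that minimizes MSE is the mean of the targets."""
    return sum(targets) / len(targets)


# Two equally valid outputs for the same input (assumed toy values).
targets = [-1.0, 1.0]
prediction = best_single_prediction(targets)

# The MSE-optimal answer lies between the two valid outputs and equals neither.
print(prediction, prediction in targets)
```

A generator avoids this by sampling: different random inputs can land on different valid outputs instead of their average.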

Generative Adversarial Networks (GANs)

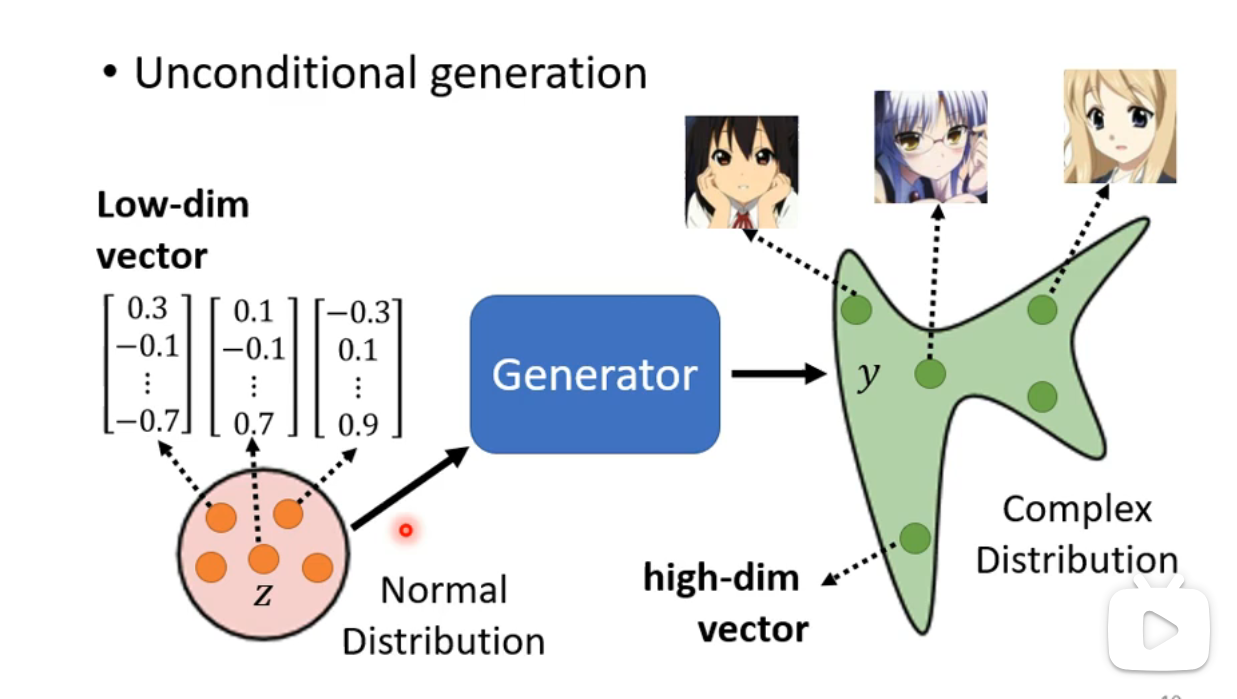

Anime Face Generation

- Unconditional generation: The generator's input does not include a specific condition; it consists only of a random vector sampled from a simple distribution.

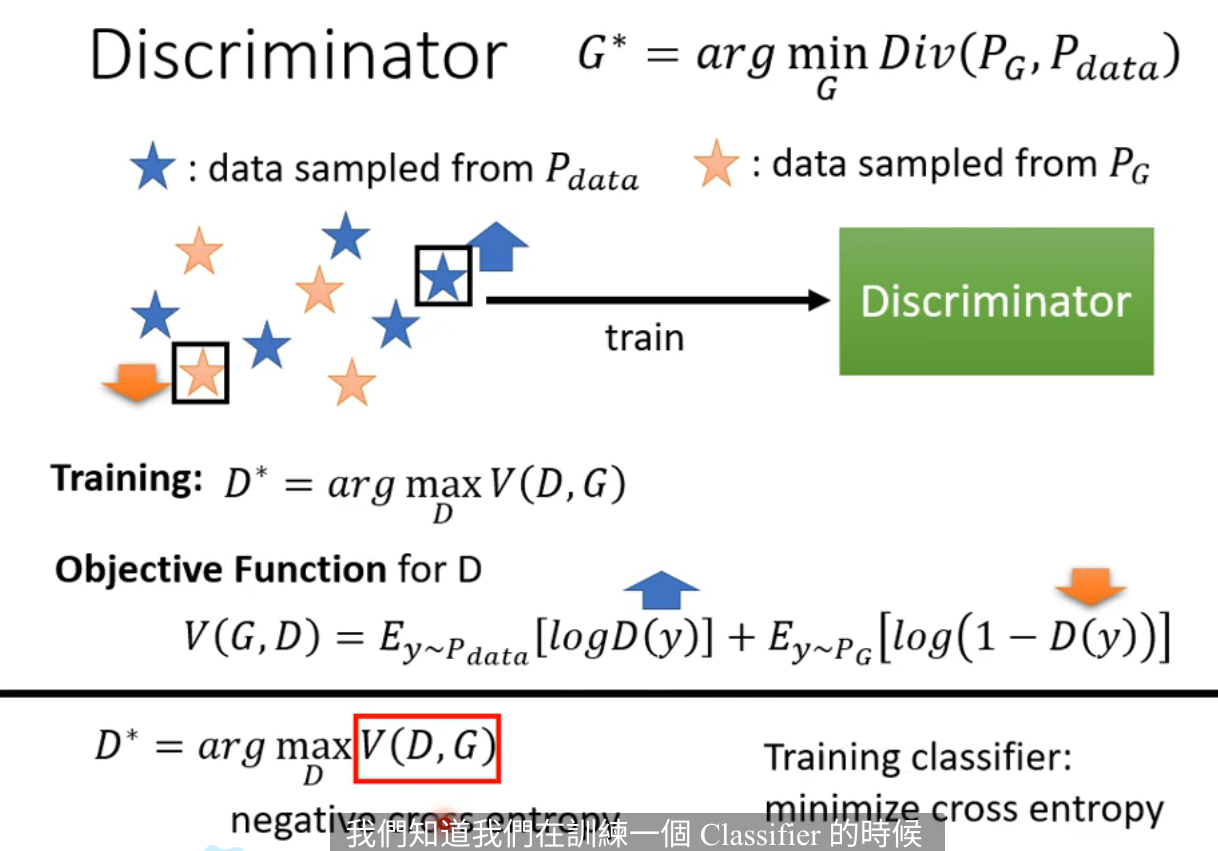

Discriminator

The Discriminator is a neural network that outputs a scalar value for each input, with higher values indicating real samples and lower values indicating fake ones. The architecture of the discriminator can be customized, but its goal is to produce a scalar that estimates the quality of generated images.

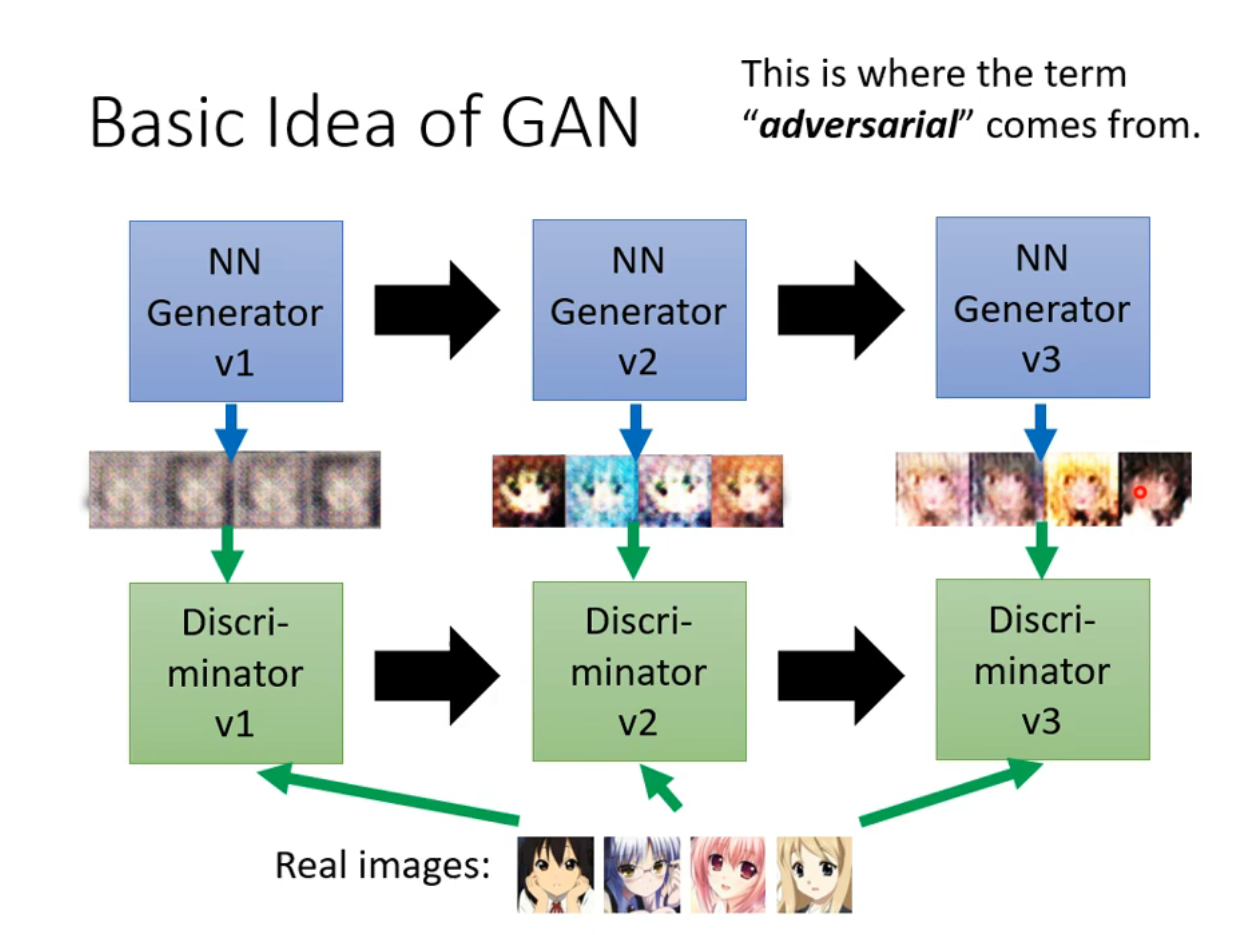

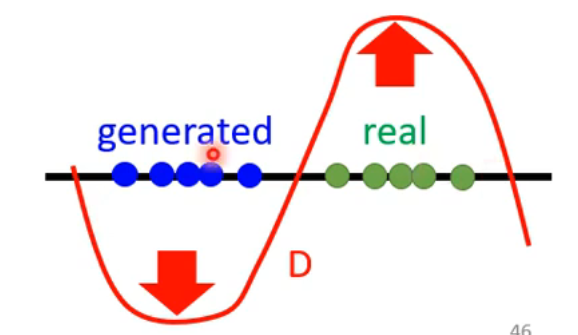

Basic Idea of GAN

The generator improves over time by learning from the discriminator’s feedback, and the discriminator’s standards become stricter as the generator improves. This adversarial process is the core of GANs.

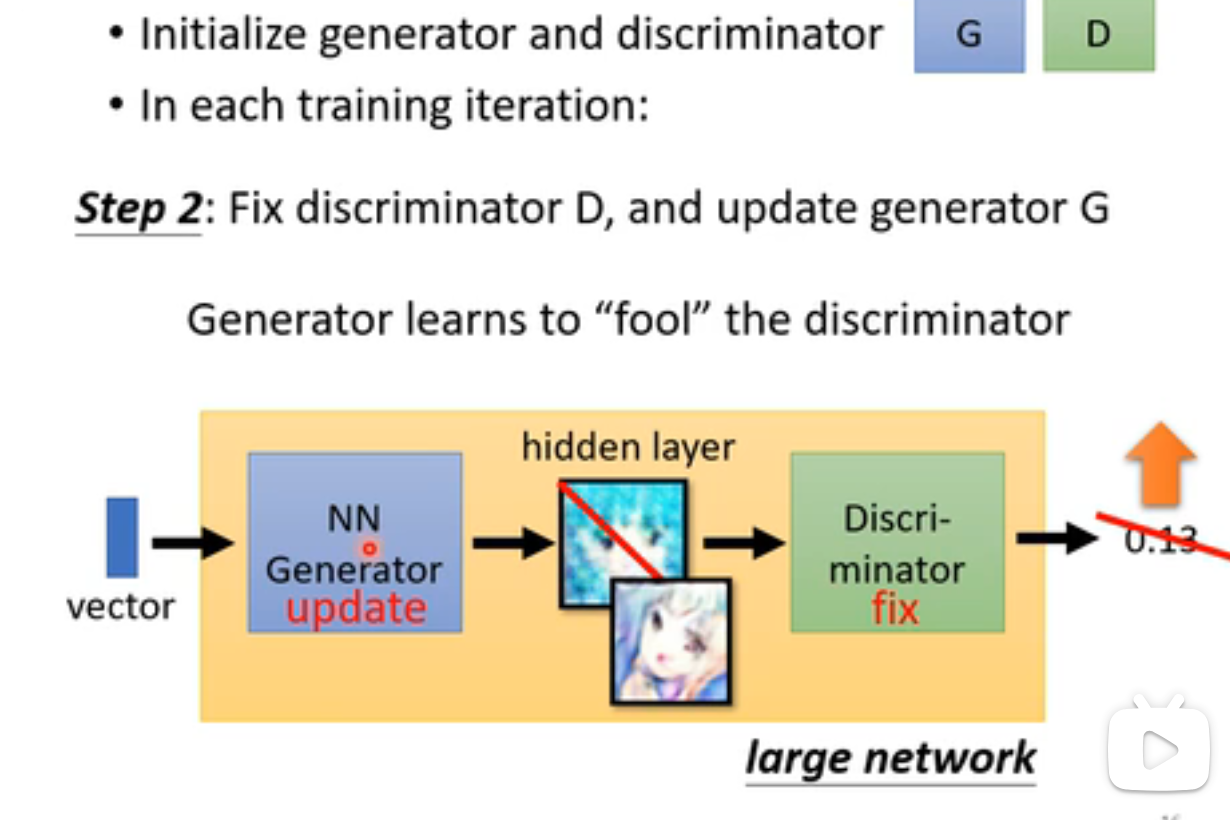

GAN Algorithm

- Step 1: Fix the generator $G$ and update the discriminator $D$. The discriminator learns to classify real versus generated objects.

- Step 2: Fix the discriminator $D$ and update the generator $G$. The generator learns to "fool" the discriminator.

- Repeat the two steps, alternating between them.

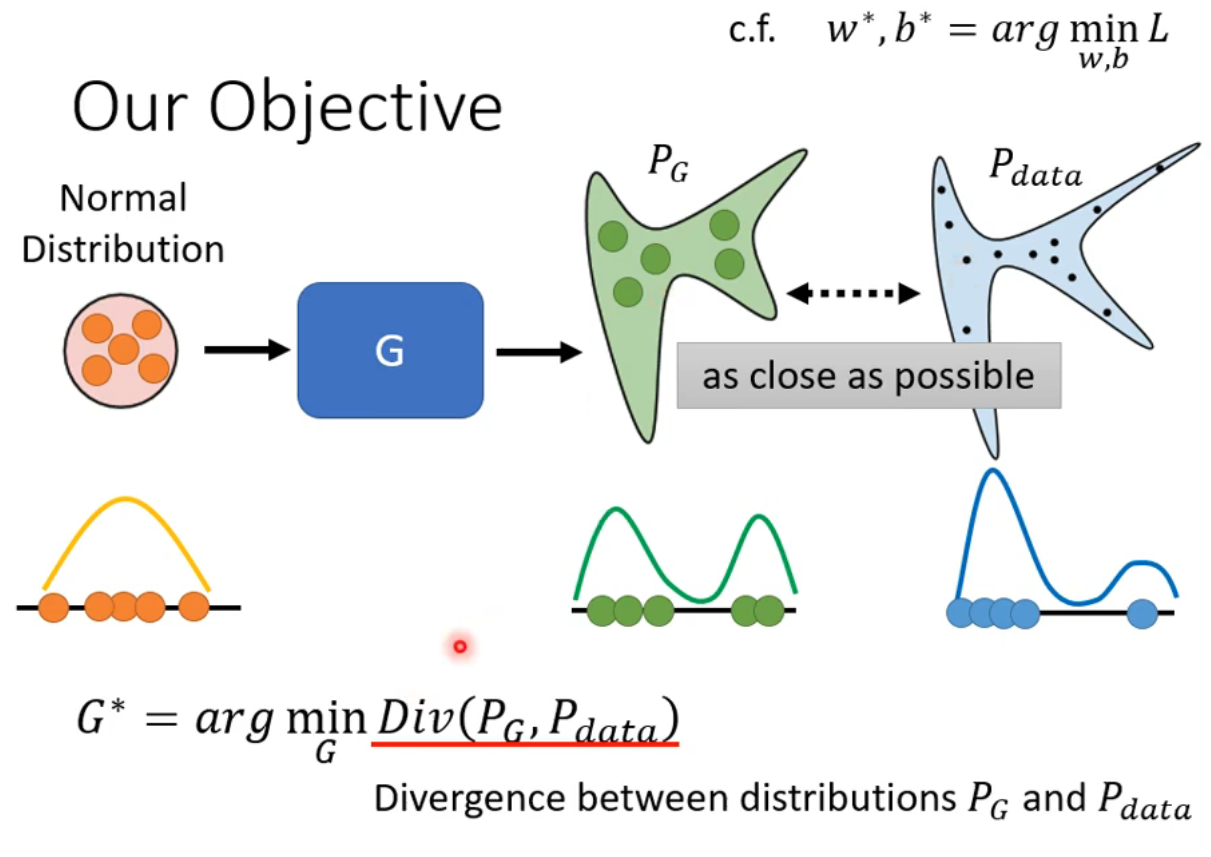
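The alternating updates can be sketched on a one-dimensional toy problem. Everything here is an assumption made for illustration: the real data is Gaussian with mean 3, the generator is a simple shift `G(z) = z + b`, the discriminator is logistic, `D(y) = sigmoid(w*y + c)`, and the generator uses the common non-saturating objective (maximize `log D(G(z))`). Gradients are written out by hand so the sketch needs only the standard library.

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def train_toy_gan(steps=200, batch=64, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on a 1-D toy problem."""
    rng = random.Random(seed)
    w, c = 0.0, 0.0  # discriminator parameters: D(y) = sigmoid(w*y + c)
    b = 0.0          # generator parameter:      G(z) = z + b
    for _ in range(steps):
        # Step 1: fix G, update D by gradient ascent on
        # mean(log D(real)) + mean(log(1 - D(fake))).
        real = [rng.gauss(3.0, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_w = grad_c = 0.0
        for y in real:
            d = sigmoid(w * y + c)
            grad_w += (1.0 - d) * y   # d/dw log D(y)
            grad_c += (1.0 - d)       # d/dc log D(y)
        for y in fake:
            d = sigmoid(w * y + c)
            grad_w -= d * y           # d/dw log(1 - D(y))
            grad_c -= d               # d/dc log(1 - D(y))
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Step 2: fix D, update G by gradient ascent on mean(log D(G(z))).
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_b = sum((1.0 - sigmoid(w * y + c)) * w for y in fake) / batch
        b += lr * grad_b
    return w, c, b


w, c, b = train_toy_gan(steps=1)
print(w > 0, b > 0)  # after one round, both D and G have moved toward the real data
```

In this toy setup the first round already pushes the generator's shift `b` toward the real mean. Longer runs can oscillate rather than settle, which is typical of adversarial training.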

Theory Behind GAN

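Let $P_G$ denote the distribution of the generator's outputs and $P_{data}$ the distribution of the real data. Training the generator means finding $G^* = \arg\min_G \mathrm{Div}(P_G, P_{data})$, where $\mathrm{Div}$ is a divergence between the two distributions.

The challenge is computing this divergence, because neither distribution is available in closed form.

Although we don't know the full distributions of $P_G$ and $P_{data}$, we can sample from both: real examples come from the training set, and generated examples come from the generator.

The discriminator should assign high scores to samples from $P_{data}$ and low scores to samples from $P_G$. It is trained as $D^* = \arg\max_D V(D, G)$, where $V(D, G) = E_{y \sim P_{data}}[\log D(y)] + E_{y \sim P_G}[\log(1 - D(y))]$. The maximum value of this objective is related to the JS divergence.

A small divergence means real and generated samples are hard to tell apart, so even the best discriminator reaches only a low objective value; a large divergence means the two are easy to separate. Our task is to minimize the divergence, which gives $G^* = \arg\min_G \max_D V(D, G)$.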
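The link between the discriminator's objective and the divergence can be made explicit. The following is the standard result for the original GAN objective, written with $P_{data}$ for the real data distribution, $P_G$ for the generator's distribution, and $V(D, G) = E_{y \sim P_{data}}[\log D(y)] + E_{y \sim P_G}[\log(1 - D(y))]$; this notation is assumed here for illustration.

$$
D^*(y) = \frac{P_{data}(y)}{P_{data}(y) + P_G(y)}
$$

$$
\max_D V(D, G) = V(D^*, G) = -2\log 2 + 2\,\mathrm{JSD}(P_{data} \,\|\, P_G)
$$

So, for a fixed generator, the best achievable discriminator objective is the JS divergence up to a constant offset and scale, and minimizing it over $G$ minimizes the JS divergence.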

Tips for GANs

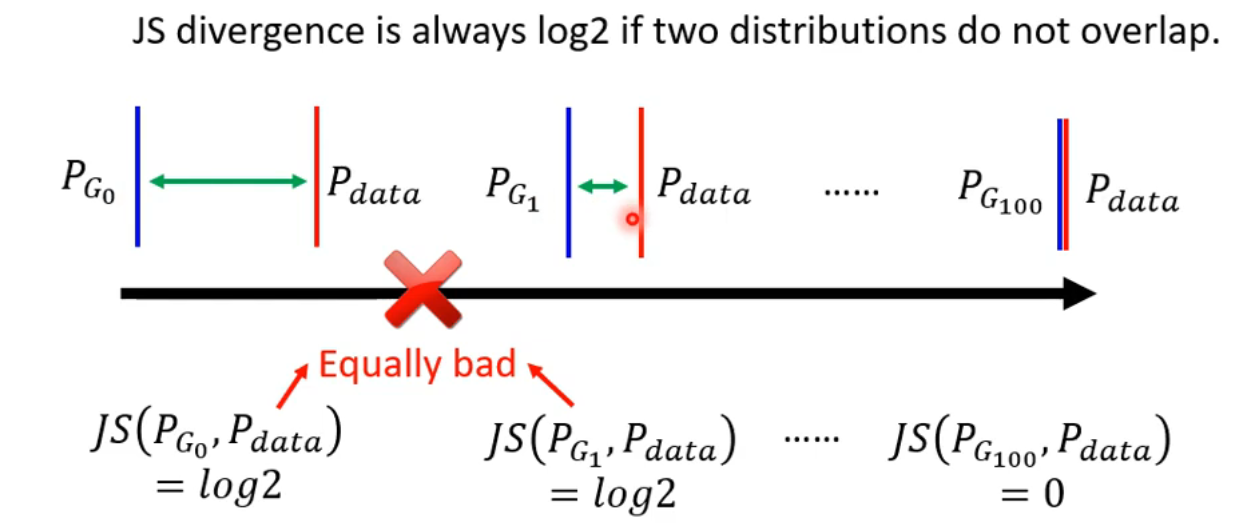

Problems of JS Divergence

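In most cases, $P_G$ (the generator's distribution) and $P_{data}$ (the real data distribution) barely overlap, because both typically lie on low-dimensional manifolds in a high-dimensional space.

Moreover, since we only sample from these distributions, the samples are unlikely to overlap even if the actual distributions do. When two distributions do not overlap, the JS divergence is always $\log 2$, no matter how far apart they are, so with limited samples it typically yields $\log 2$.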

In practice, this means the discriminator achieves 100% accuracy due to the lack of overlap, which doesn’t reflect improvements in the generator. Thus, JS divergence is not a reliable measure during GAN training.
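A small numeric check of this saturation, assuming two discrete distributions that each put all their mass on a single bin (a deliberately extreme toy case). The helper names are illustrative.

```python
import math


def kl(p, q):
    """KL divergence for discrete distributions; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js(p, q):
    """Jensen-Shannon divergence: average KL to the mixture m = (p + q) / 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def point_mass(position, bins=10):
    """Distribution with all probability on one bin."""
    return [1.0 if i == position else 0.0 for i in range(bins)]


near = js(point_mass(0), point_mass(1))  # supports are adjacent but disjoint
far = js(point_mass(0), point_mass(9))   # supports are far apart
print(near == far)  # both equal log 2: JS cannot tell "close" from "far"
```

Because the value stays at log 2 until the supports actually overlap, a generator that moves closer to the data gets no signal that it is improving.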

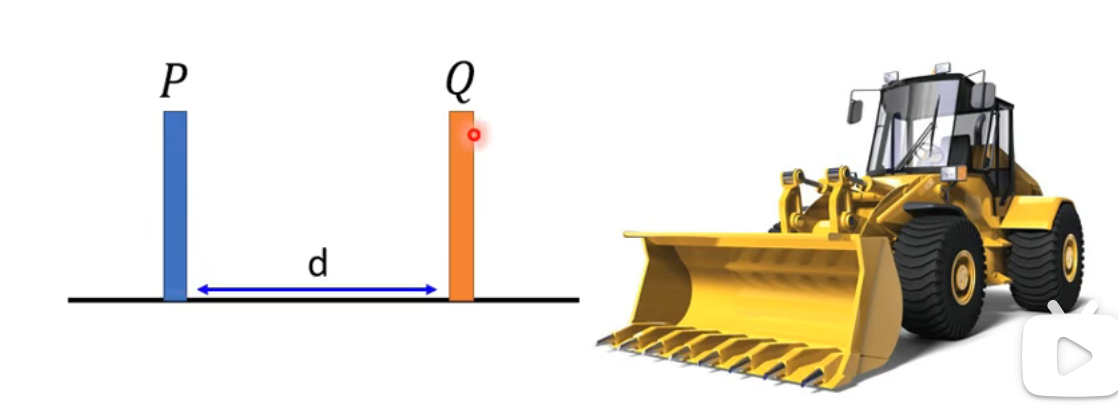

Wasserstein Distance

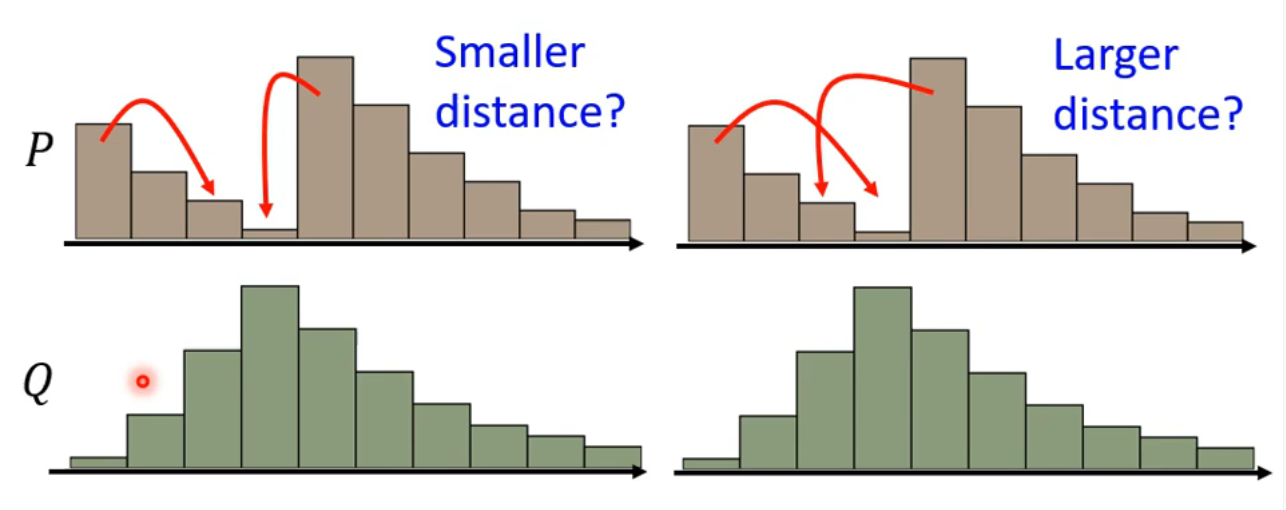

To improve upon JS divergence, consider Wasserstein distance. Think of one distribution as a pile of earth and the other as a target. The Wasserstein distance is the average distance that "earth" needs to move to match the target distribution.

Among all possible "moving plans," we use the one with the smallest average distance to define the Wasserstein distance, which decreases as the generator improves.
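For one-dimensional samples the best moving plan is easy to compute: with two equally sized sample sets, matching the sorted samples in order gives the smallest average distance. A minimal sketch under that assumption (1-D data, equal sample counts); the function name is illustrative.

```python
def wasserstein_1d(samples_a, samples_b):
    """Wasserstein-1 distance between two equally sized 1-D sample sets.

    In one dimension the optimal plan pairs the i-th smallest sample of one
    set with the i-th smallest sample of the other.
    """
    if len(samples_a) != len(samples_b):
        raise ValueError("this sketch assumes equally sized sample sets")
    a, b = sorted(samples_a), sorted(samples_b)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


print(wasserstein_1d([0.0, 0.0], [1.0, 1.0]))  # 1.0
print(wasserstein_1d([0.0, 0.0], [5.0, 5.0]))  # 5.0
```

Unlike the JS divergence, the value shrinks smoothly as the two sample sets move closer, even when they never overlap.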

Wasserstein Distance and WGAN

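We evaluate the Wasserstein distance by:

$$
\max_{D \in 1\text{-Lipschitz}} \left\{ E_{y \sim P_{data}}[D(y)] - E_{y \sim P_G}[D(y)] \right\}
$$

The function $D$ must be 1-Lipschitz, meaning it has to be sufficiently smooth. Without this constraint, $D$ could push its outputs toward $+\infty$ on real samples and $-\infty$ on generated ones, and training would not converge.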

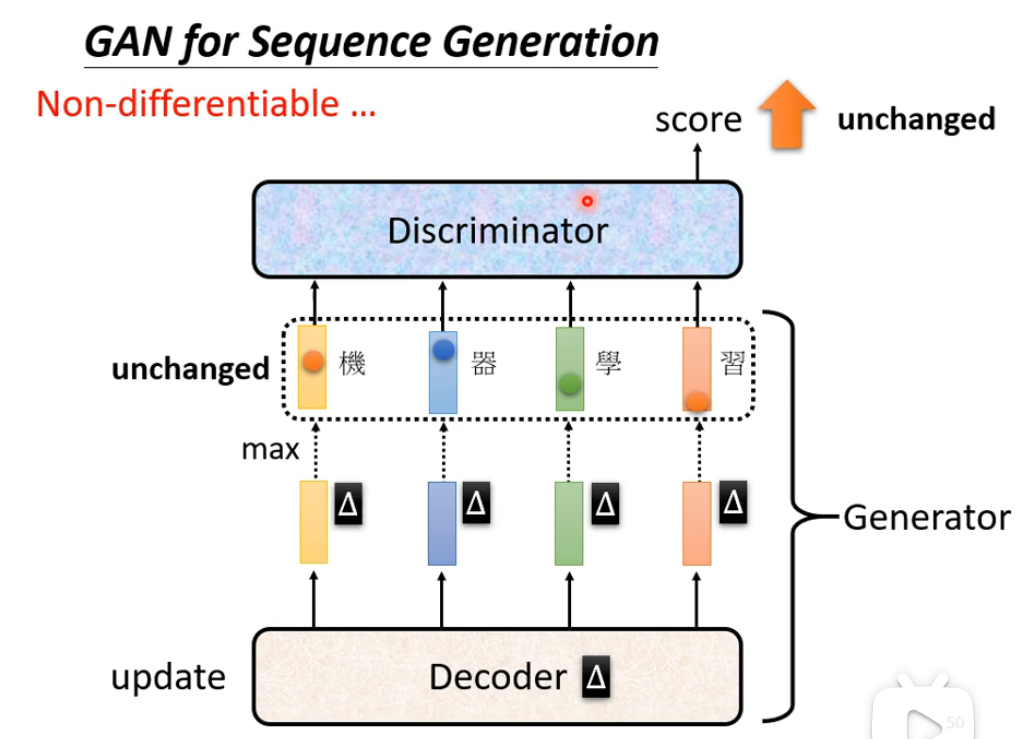
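A minimal sketch of why the smoothness constraint matters, assuming a linear critic `D(y) = w*y` and weight clipping (the constraint used in the original WGAN formulation) to keep `|w| <= 1`. A linear critic is a strong simplification chosen only to keep the example small; it gives a lower bound on the true distance in general.

```python
def train_linear_critic(real, fake, steps=50, lr=0.1, clip=1.0):
    """Maximize mean(D(real)) - mean(D(fake)) for D(y) = w*y with |w| <= clip."""
    mean_real = sum(real) / len(real)
    mean_fake = sum(fake) / len(fake)
    w = 0.0
    for _ in range(steps):
        # The objective is w * (mean_real - mean_fake), so its gradient
        # with respect to w is the difference of the means.
        w += lr * (mean_real - mean_fake)
        # Weight clipping keeps the critic 1-Lipschitz (for clip = 1).
        w = max(-clip, min(clip, w))
    estimate = w * (mean_real - mean_fake)
    return w, estimate


w, estimate = train_linear_critic(real=[3.0, 3.0], fake=[0.0, 0.0])
print(w, estimate)  # 1.0 3.0
```

Without the clipping step, `w` would grow at every iteration and the objective would never stop increasing; with it, the estimate settles at 3.0, which is the distance between the two point masses in this toy case.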

GAN for Sequence Generation

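One major challenge for GANs is generating sequences. The decoder picks each token by taking the highest-scoring option, so a small change in the decoder's parameters usually leaves the chosen tokens unchanged. The discriminator then sees the same sequence and returns the same score, which means the score is not differentiable with respect to the decoder's parameters and gradient descent receives no useful signal.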
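The effect can be seen with a single decoding step. This sketch assumes greedy decoding over three tokens and a hypothetical fixed lookup table standing in for the discriminator's score; both are illustrative simplifications.

```python
def greedy_token(logits):
    """Index of the highest-scoring token (the discrete choice)."""
    return max(range(len(logits)), key=lambda i: logits[i])


def discriminator_score(token):
    """Hypothetical discriminator: a fixed score for each token."""
    scores = {0: 0.2, 1: 0.9, 2: 0.4}
    return scores[token]


def score_change(logits, index, eps):
    """Change in the discriminator score after nudging one logit by eps."""
    before = discriminator_score(greedy_token(logits))
    nudged = list(logits)
    nudged[index] += eps
    after = discriminator_score(greedy_token(nudged))
    return after - before


logits = [2.0, 1.0, -0.5]
print(score_change(logits, index=1, eps=1e-3))  # 0.0: the chosen token did not change
```

Only a nudge large enough to flip the chosen token changes the score, and then it jumps. A function that is flat almost everywhere gives gradient descent nothing to follow.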

Evaluation of Generation

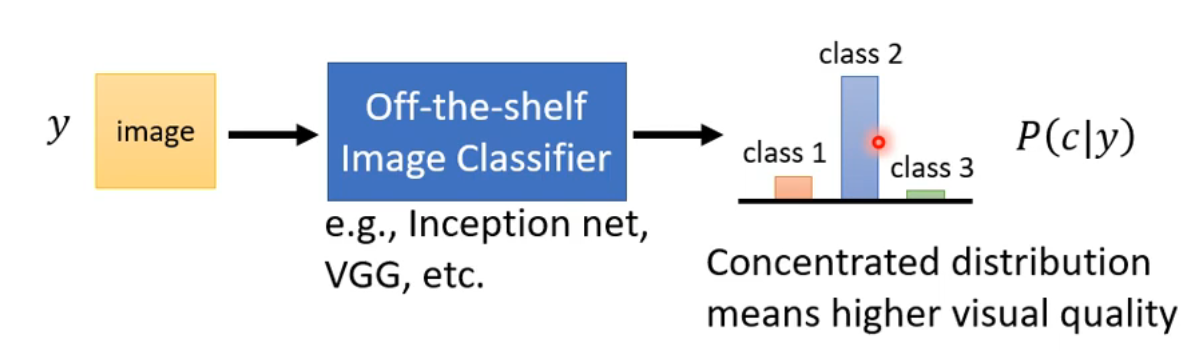

Quality of Generated Images

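The task is to automatically evaluate the quality of generated images. One method is to use a pre-trained image classifier that takes an image $y$ as input and outputs a class distribution $P(c \mid y)$. A concentrated distribution, where one class clearly dominates, suggests that the image is recognizable and therefore of higher visual quality.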

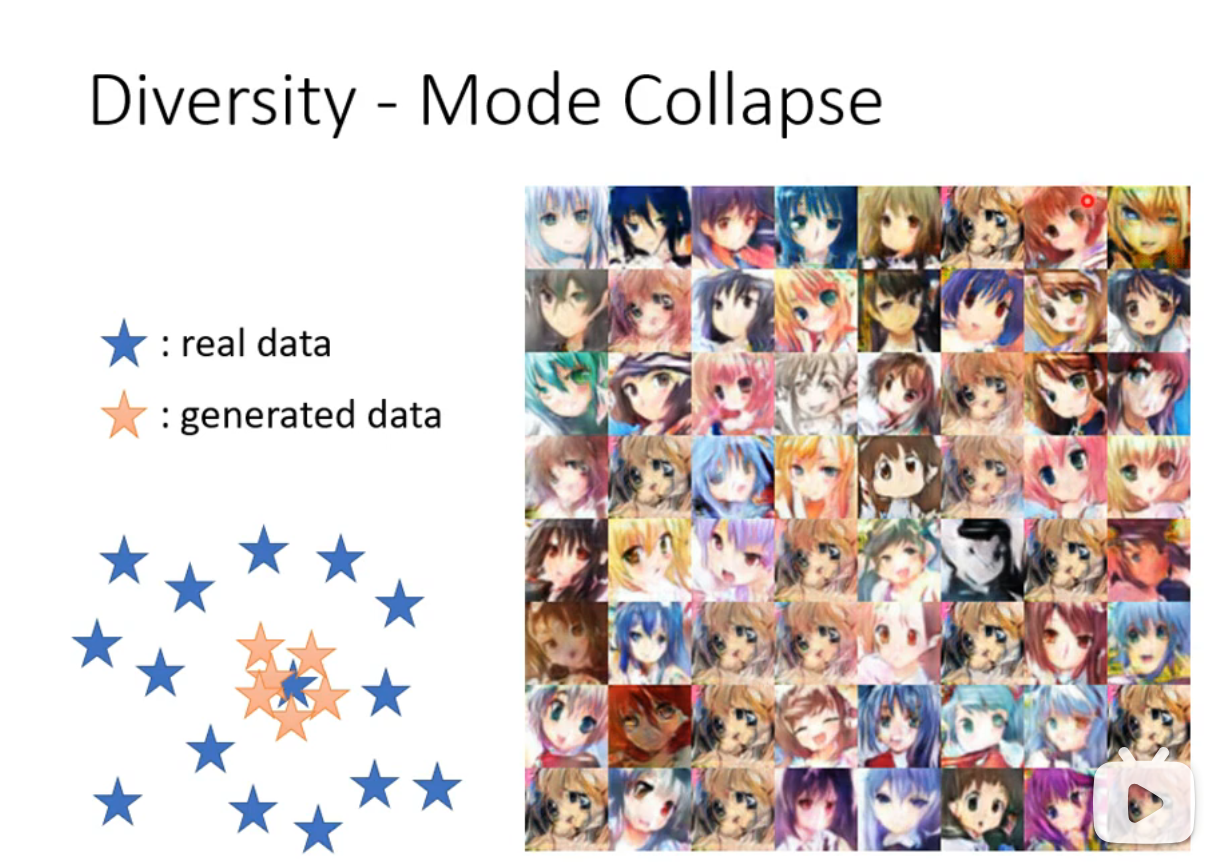

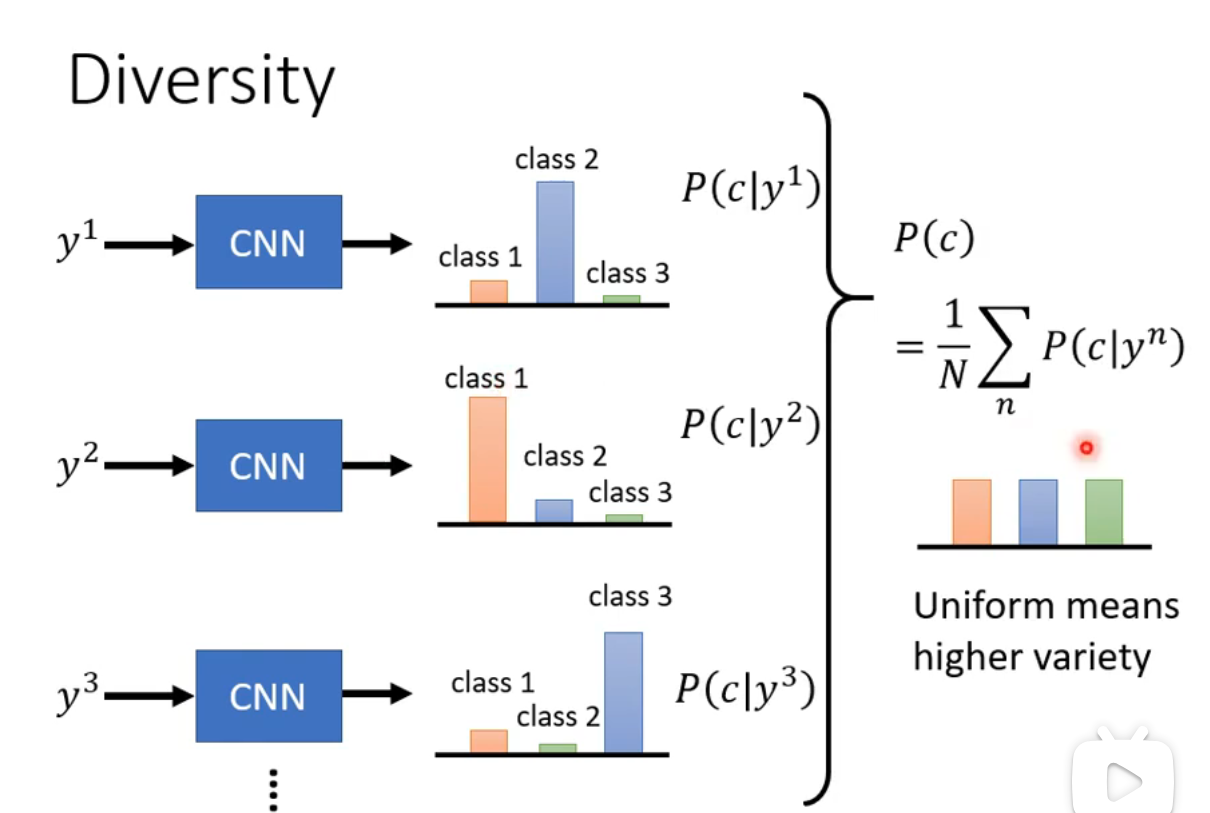

Diversity

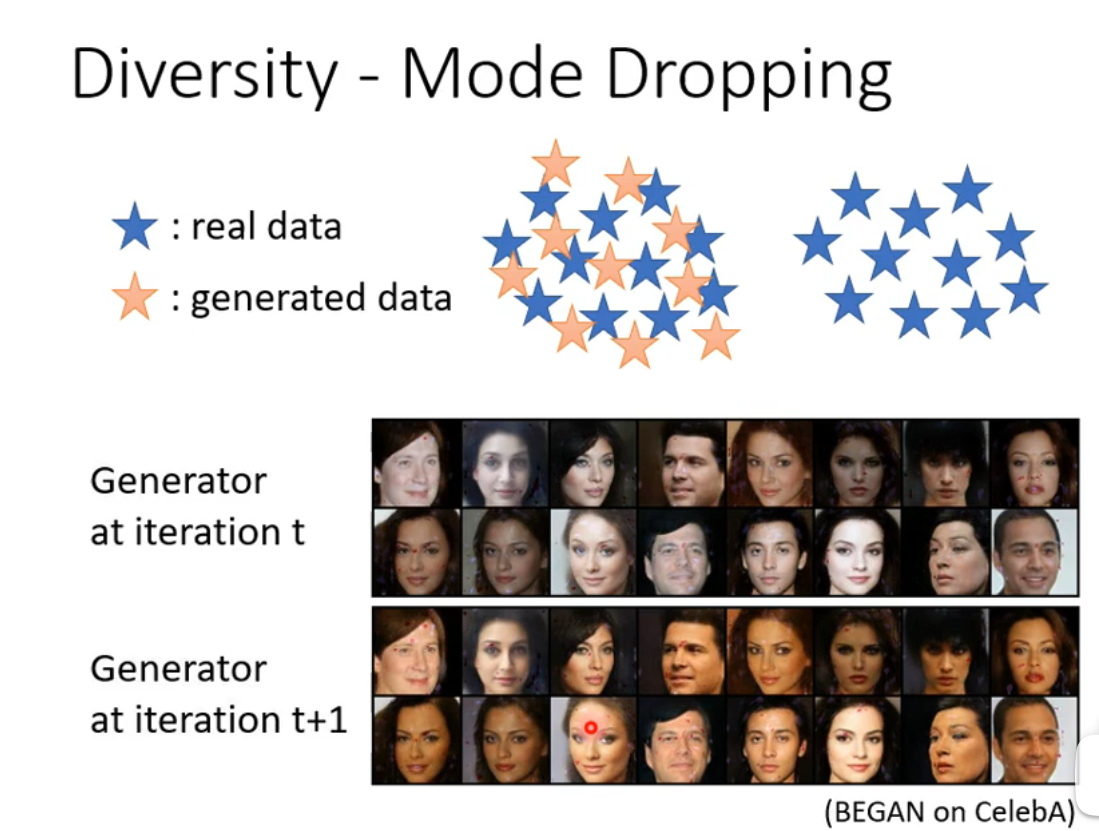

One key issue in GANs is Mode Collapse, where the generator produces high-quality images but only a few distinct types. It initially appears effective, but over time it becomes clear that the generator only outputs a limited set of images.

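Another problem is Mode Dropping, where the generated distribution covers only part of the real data distribution. The samples may look varied, yet whole modes of the real data are missing. For example, across iterations the generated faces may differ only in skin color, without genuinely new faces appearing.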

High quality and diversity together yield a large Inception Score (IS). However, there's often a trade-off between the two. Quality is judged on a single image, by how concentrated its class distribution is, while diversity is assessed over many images, by how evenly their averaged class distribution spreads across classes.
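A minimal sketch of the Inception Score, assuming the classifier outputs are already available as a list of per-image class probability vectors (the classifier itself is not modeled here). It uses the usual definition: the exponential of the average KL divergence between each image's class distribution and the average class distribution.

```python
import math


def inception_score(class_probs):
    """IS = exp(mean over images of KL(p(c|y) || p(c)))."""
    n = len(class_probs)
    num_classes = len(class_probs[0])
    # Average class distribution over all images: flat when the set is diverse.
    marginal = [sum(p[c] for p in class_probs) / n for c in range(num_classes)]
    total_kl = 0.0
    for p in class_probs:
        total_kl += sum(
            pc * math.log(pc / marginal[c]) for c, pc in enumerate(p) if pc > 0
        )
    return math.exp(total_kl / n)


# Assumed toy classifier outputs for three images and three classes.
sharp_and_diverse = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
sharp_but_collapsed = [[1.0, 0.0, 0.0]] * 3
print(inception_score(sharp_and_diverse) > inception_score(sharp_but_collapsed))
```

In the toy data, confident predictions spread over all three classes score close to 3 (the number of classes), while confident predictions that all fall into one class score 1, the minimum.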

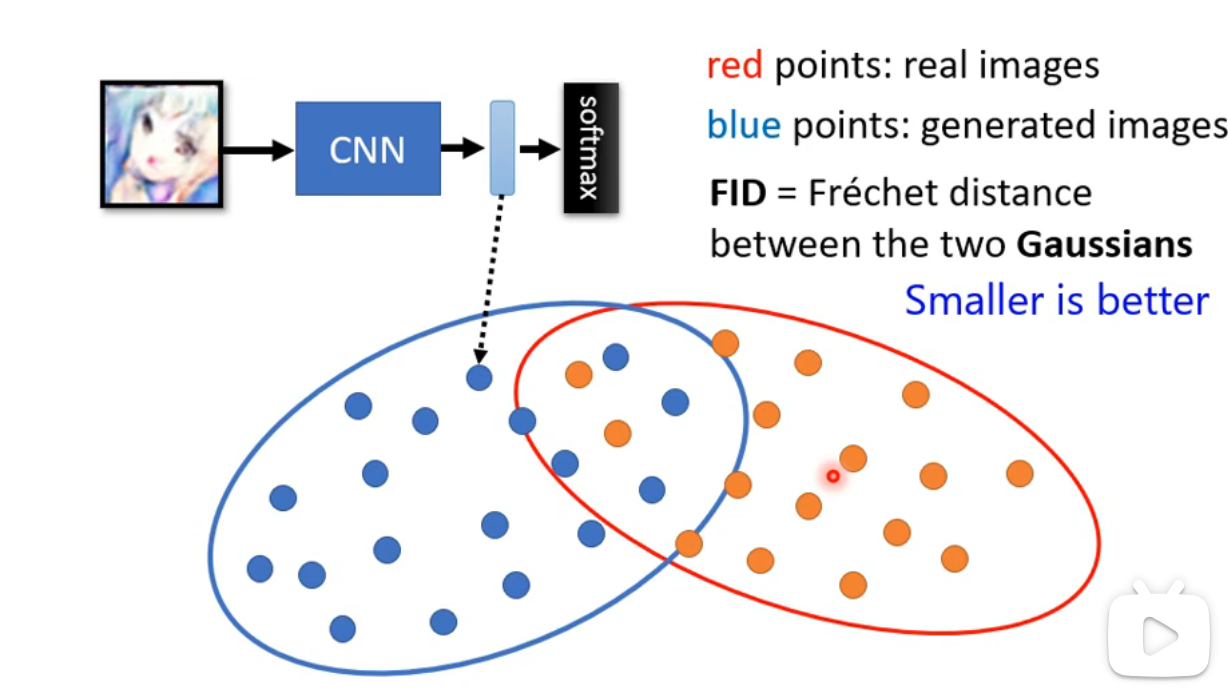

Frechet Inception Distance (FID)

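Rather than using the classifier's final class probabilities, FID uses the feature vector taken before the softmax layer. The features of real images and of generated images are each modeled as a Gaussian, and FID is the Fréchet distance between the two Gaussians, so a smaller value is better.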
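For two Gaussians with means $\mu_1, \mu_2$ and covariances $\Sigma_1, \Sigma_2$, the Fréchet distance is $\lVert \mu_1 - \mu_2 \rVert^2 + \mathrm{Tr}(\Sigma_1 + \Sigma_2 - 2(\Sigma_1 \Sigma_2)^{1/2})$. The sketch below is a simplified version that assumes diagonal covariances, in which case the trace term reduces to a sum of squared differences of per-dimension standard deviations. Real FID implementations use the full covariance matrices; the feature vectors here are made-up toy values.

```python
import statistics


def frechet_distance_diagonal(features_a, features_b):
    """Frechet distance between Gaussians fit per dimension (diagonal covariance)."""
    dims = len(features_a[0])
    total = 0.0
    for d in range(dims):
        col_a = [f[d] for f in features_a]
        col_b = [f[d] for f in features_b]
        mu_a, mu_b = statistics.mean(col_a), statistics.mean(col_b)
        sd_a, sd_b = statistics.pstdev(col_a), statistics.pstdev(col_b)
        # Mean term plus the diagonal special case of the trace term.
        total += (mu_a - mu_b) ** 2 + (sd_a - sd_b) ** 2
    return total


real_features = [[0.0, 1.0], [2.0, 3.0]]
generated_features = [[1.0, 1.0], [3.0, 3.0]]  # first dimension shifted by 1
print(frechet_distance_diagonal(real_features, generated_features))  # 1.0
```

Identical feature sets give a distance of 0, and the value grows as the generated features drift away from the real ones in mean or spread.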

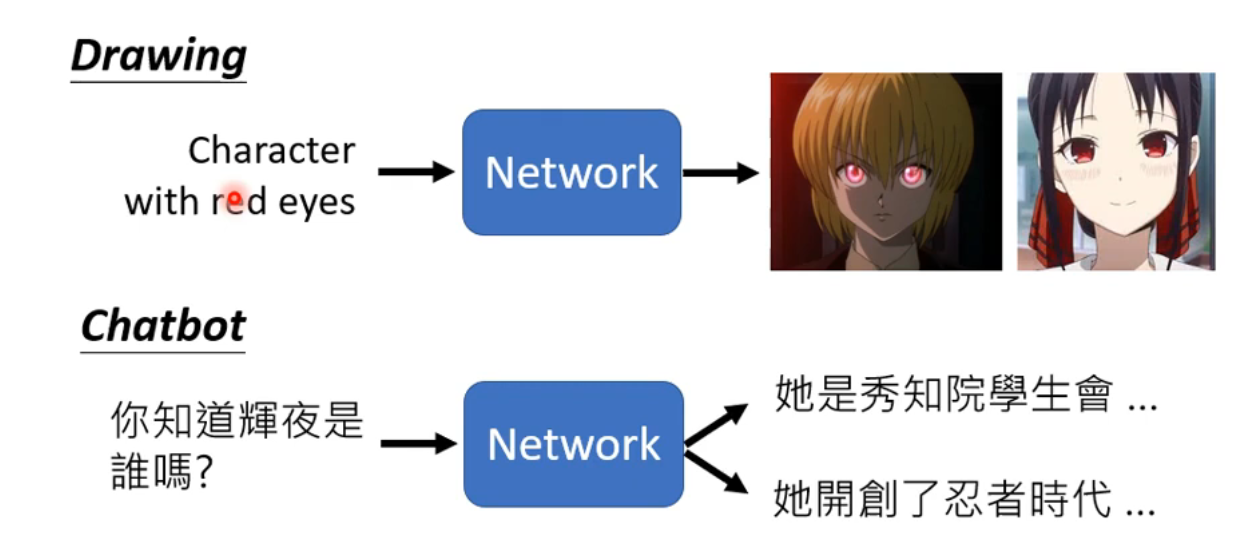

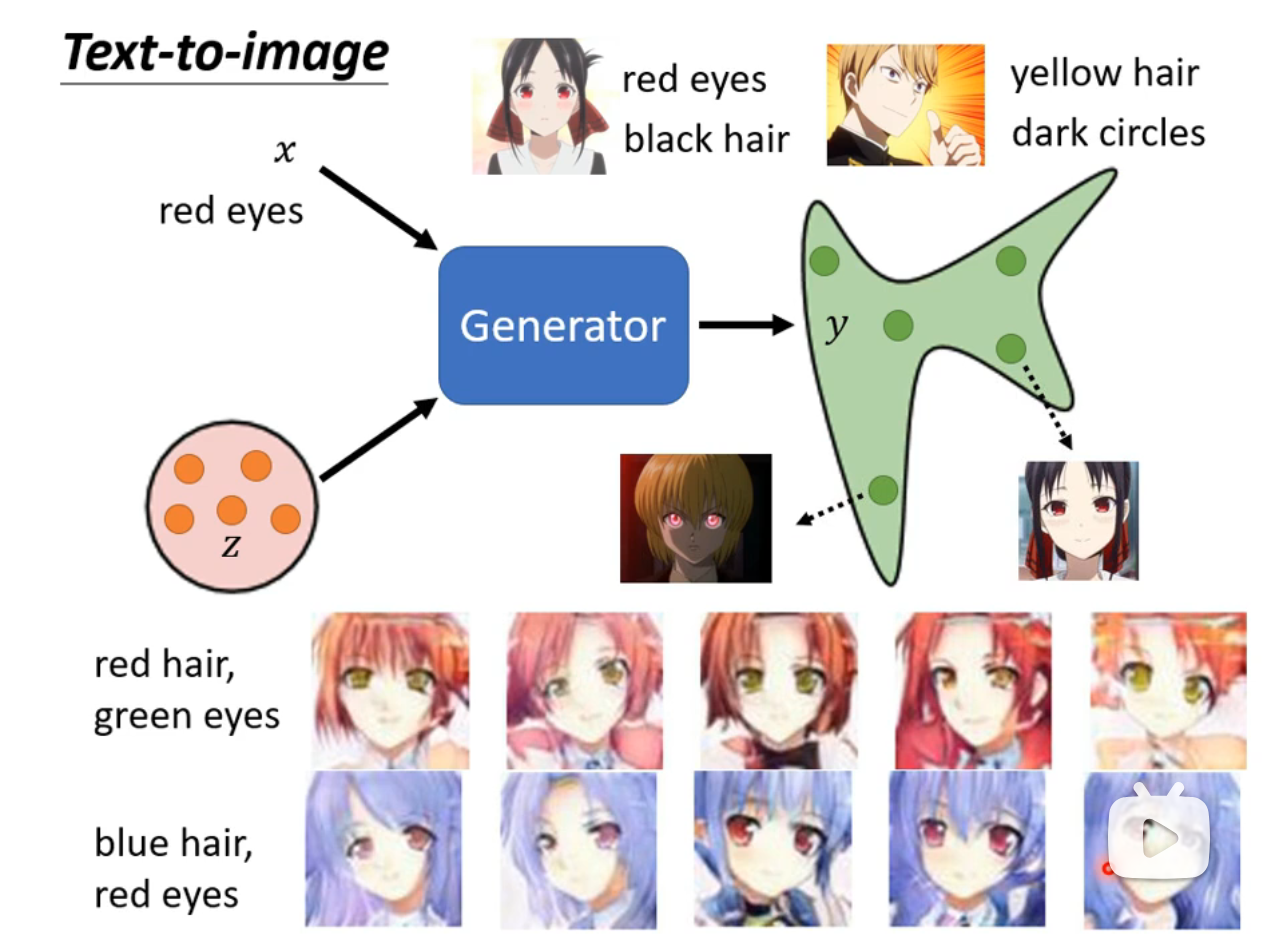

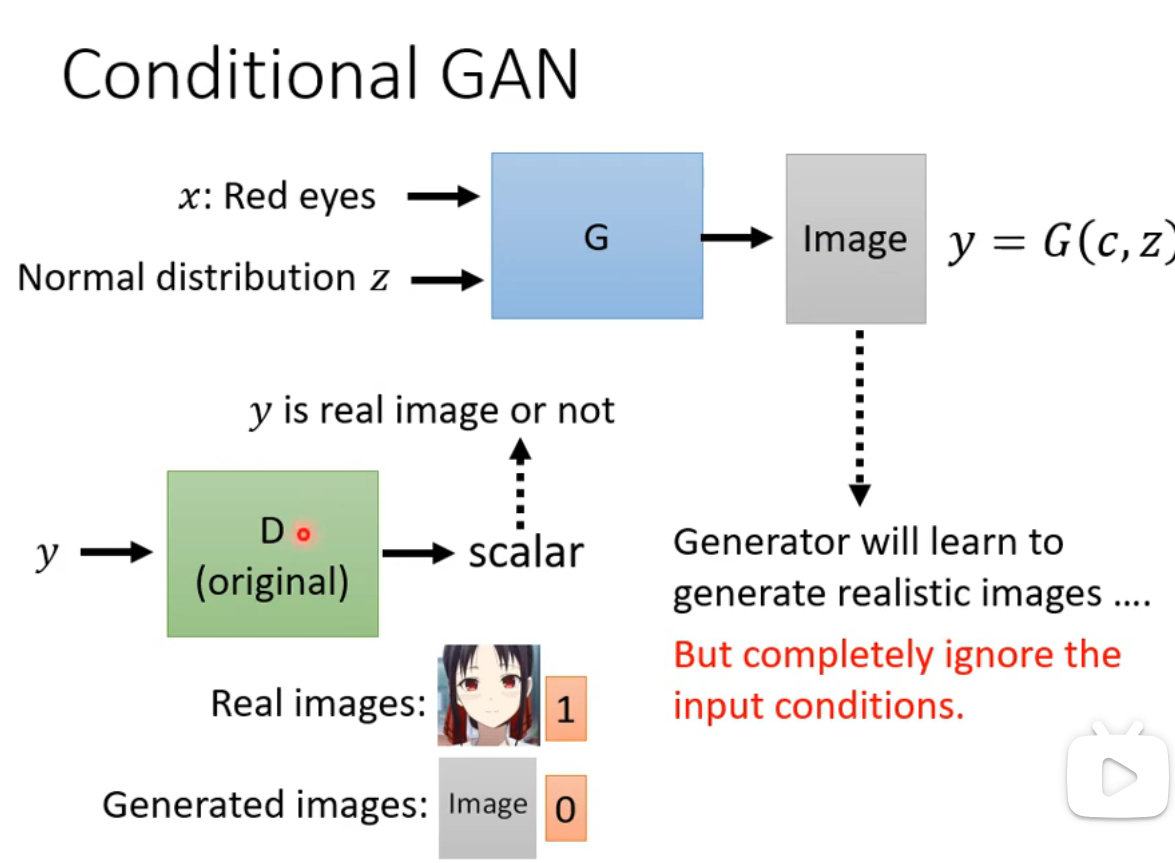

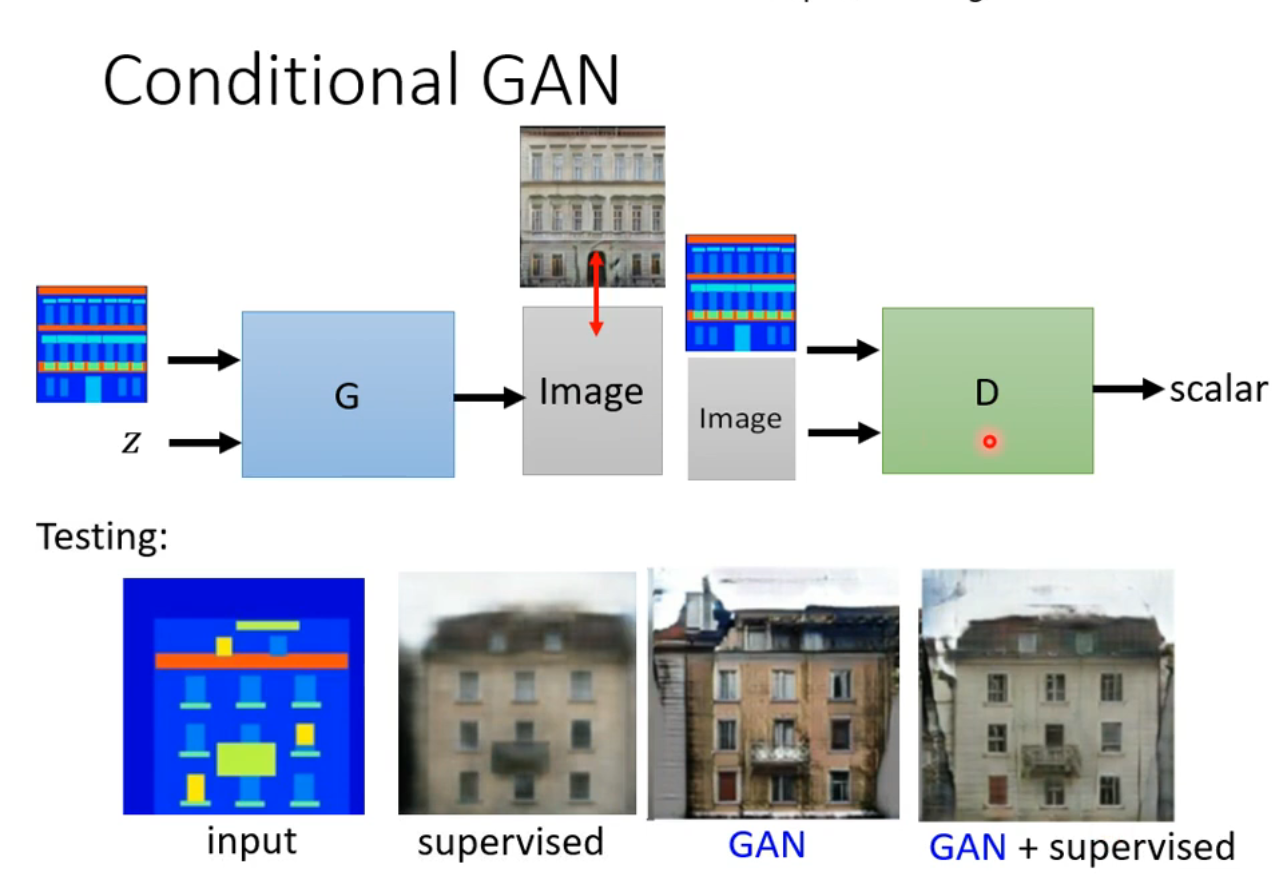

Conditional GAN

Conditional GAN: Given a condition $x$, the generator produces an output $y$ from $x$ together with a random sample $z$.

Example:

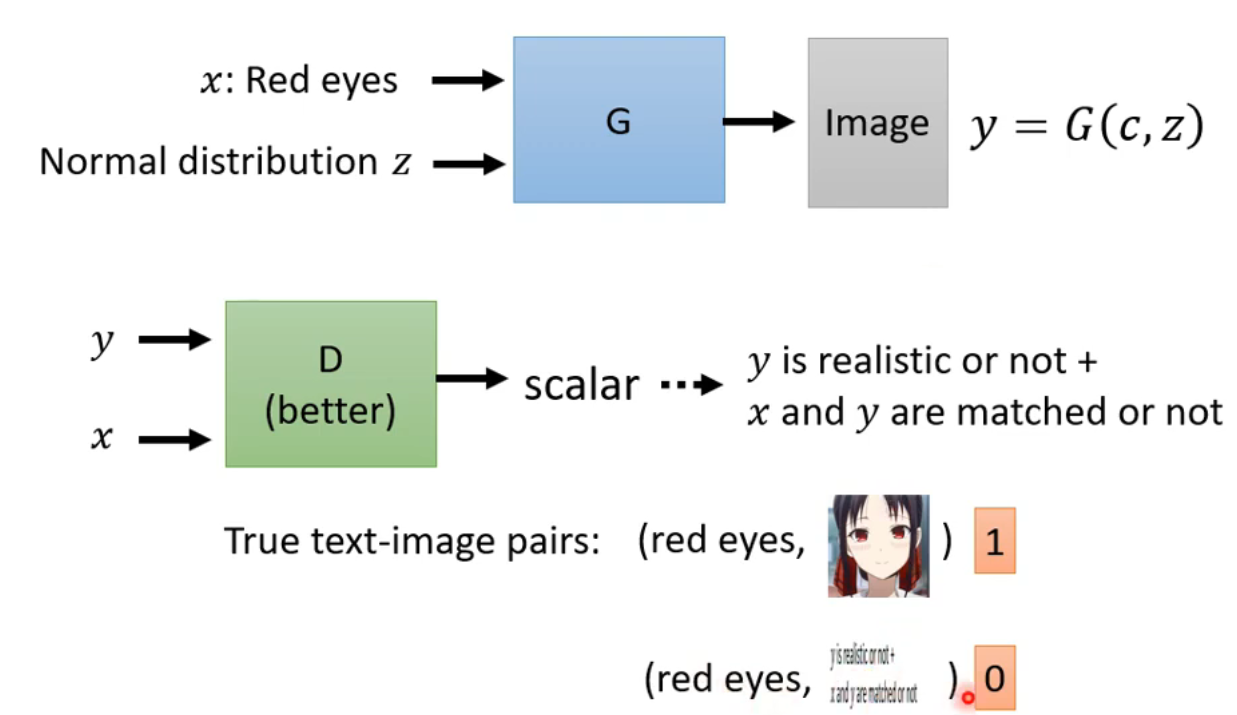

The discriminator should take both the condition $x$ and the output $y$ as input.

This requires paired data so the discriminator can judge both the realism of $y$ and whether $y$ matches $x$.

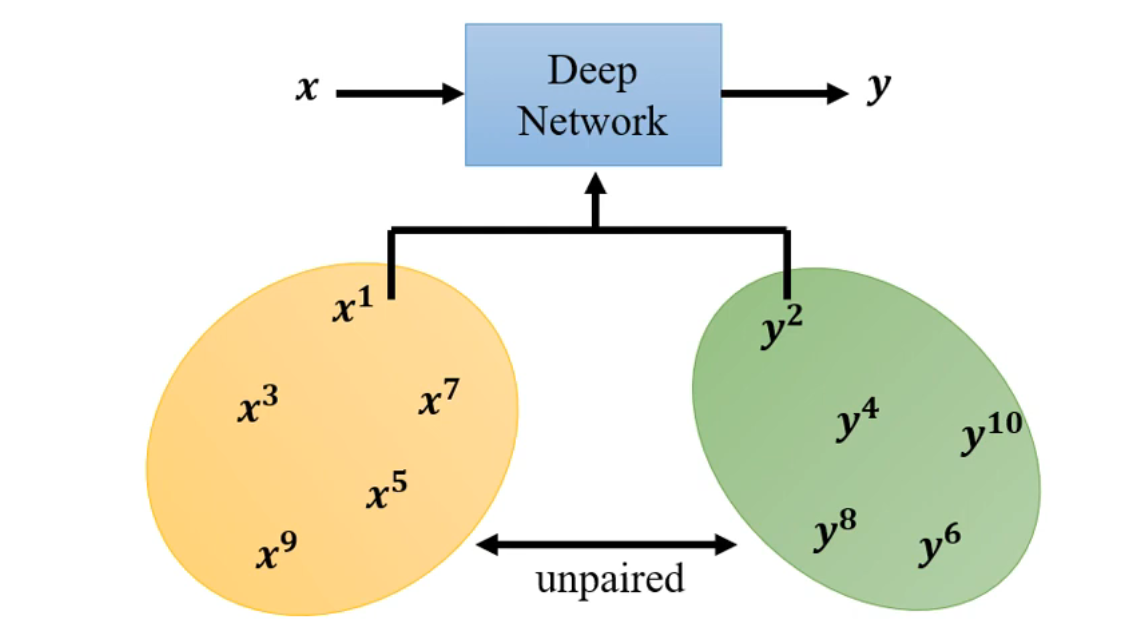
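One way to picture the paired-data requirement is to list the examples a conditional discriminator could be trained on. This sketch assumes three kinds of examples: real matching pairs (label 1), generated outputs (label 0), and real outputs paired with the wrong condition (label 0). The mismatched negatives are an assumption added here so that "matches the condition" is actually tested; the function, the string placeholders for images, and the stand-in generator are all hypothetical.

```python
def discriminator_examples(pairs, generate):
    """Build (condition, output, label) triples for a conditional discriminator.

    pairs:    list of (condition, real_output) taken from paired data
    generate: callable mapping a condition to a generated output
    """
    examples = []
    n = len(pairs)
    for i, (x, y) in enumerate(pairs):
        examples.append((x, y, 1))            # real output, matching condition
        examples.append((x, generate(x), 0))  # generated output
        if n > 1:
            _, other_y = pairs[(i + 1) % n]
            examples.append((x, other_y, 0))  # real output, wrong condition
    return examples


# Toy paired data with placeholder strings instead of images.
pairs = [("red eyes", "img_a"), ("blue hair", "img_b")]
examples = discriminator_examples(pairs, generate=lambda x: "fake:" + x)
print(len(examples))  # 6
```

Without the third kind of example, a discriminator could score highly by checking realism alone and ignoring the condition entirely.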

Learning from Unpaired Data

For tasks like image style transfer, GANs can help learn mappings without paired data.

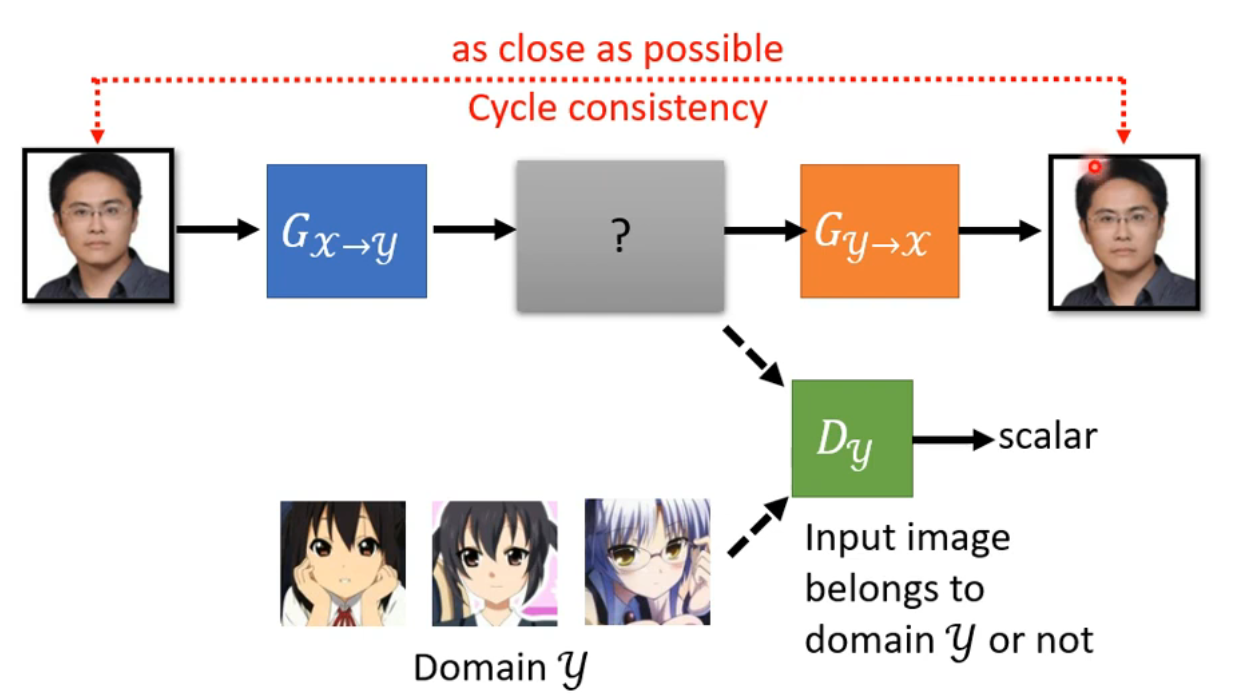

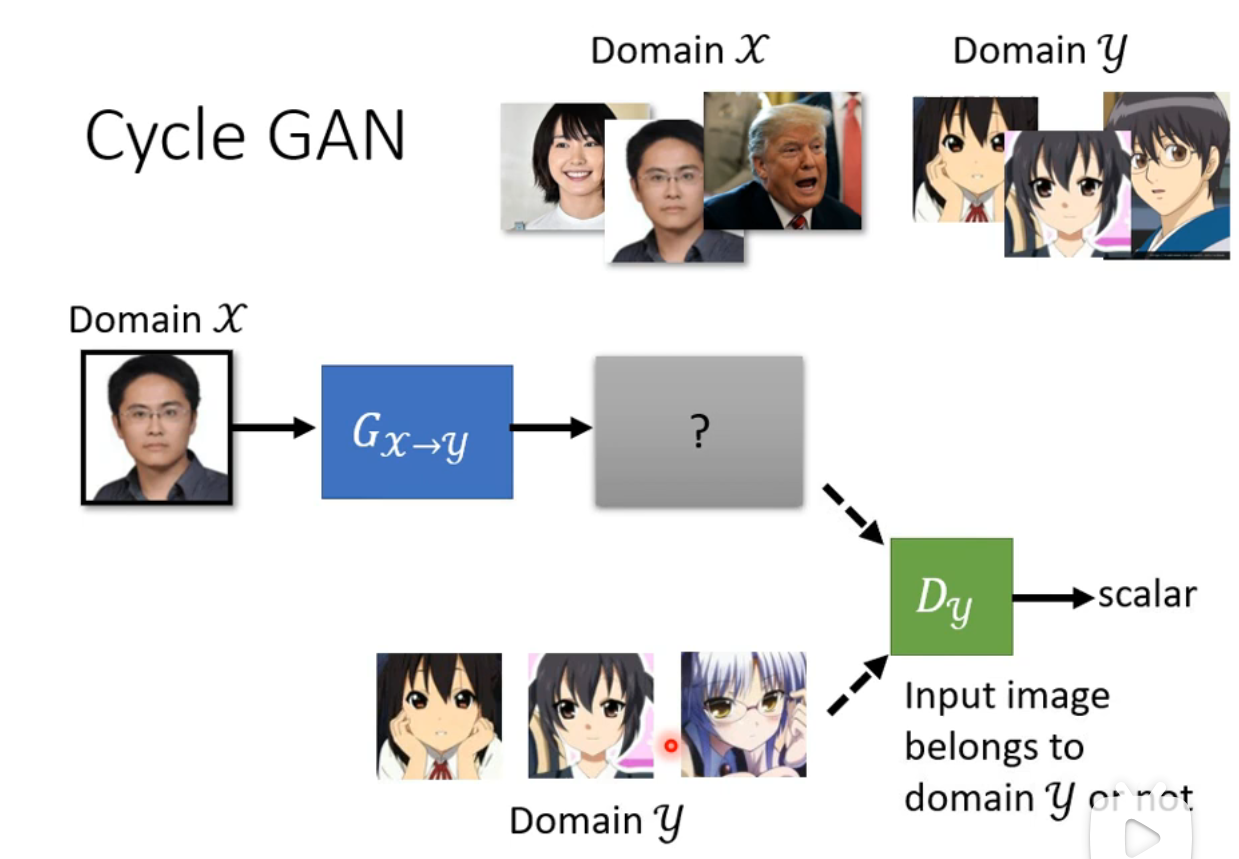

Cycle GAN

Cycle GANs aim to ensure the generator’s output relates to its input.

In a Cycle GAN, one generator transforms